Archive for September 2020

OZONE DEPLETION PART-2

Posted on: September 30, 2020

SUMMARY: The overall structure of changes in total column ozone levels over a 50-year sample period from 1966 to 2015, across latitudes from -90° to +71°, shows that the Antarctica data prior to 1995 represent a peculiar outlier condition specific to that time and place rather than a long-term trend in global mean ozone concentration. This finding is inconsistent with the Rowland-Molina theory of chemical ozone depletion and with the use of the periodic “ozone hole” at the South Pole as supporting evidence for that theory, a use first made in Farman et al. 1985. We conclude from this analysis that Farman et al. 1985, a study of brief ozone anomalies at the South Pole that served to legitimize the ozone crisis and the rise of the UN as a global environmental protection agency, is a fatally flawed study, too constrained in time and space to have any implication for long-term trends in global mean ozone concentration.

LIST OF POSTS ON OZONE DEPLETION: LINK: https://tambonthongchai.com/2021/03/31/list-of-posts-on-ozone-depletion/

KEY EVENTS IN THE GENESIS OF THE MONTREAL PROTOCOL AND THE RISE OF THE UN AS GLOBAL ENVIRONMENTAL PROTECTION AGENCY

In 1971, environmentalist James Lovelock studied the unrestricted release of halogenated hydrocarbons (HHC) into the atmosphere from their use as aerosol dispensers, fumigants, pesticides, and refrigerants, and found HHC in the air over the middle of the Atlantic Ocean. He was concerned that these chemicals were man-made, did not otherwise occur in nature, and were chemically inert, and that their atmospheric release could therefore cause irreversible accumulation. In a landmark 1973 paper he presented the discovery that air samples over the Atlantic Ocean, far from human habitation, contained measurable quantities of HHC. The paper established for the first time that environmental issues could be framed on a global scale, and it was the first of three key events that eventually led to the Montreal Protocol and its worldwide ban on the production, sale, and atmospheric release of HHC. However, since HHC were non-toxic and, as of 1973, environmental science knew of no harmful effects of HHC, their accumulation in the atmosphere remained an academic curiosity.

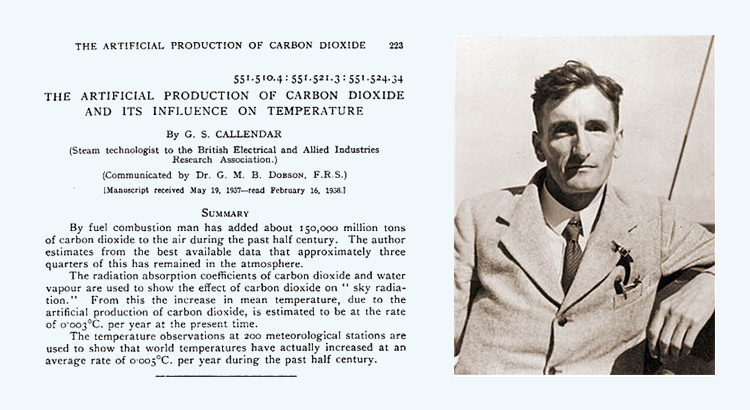

This situation changed the following year with the publication of a paper by Mario Molina and Frank Rowland that contains the foundational theory of ozone depletion and the rationale for the Montreal Protocol’s plan to save the ozone layer.

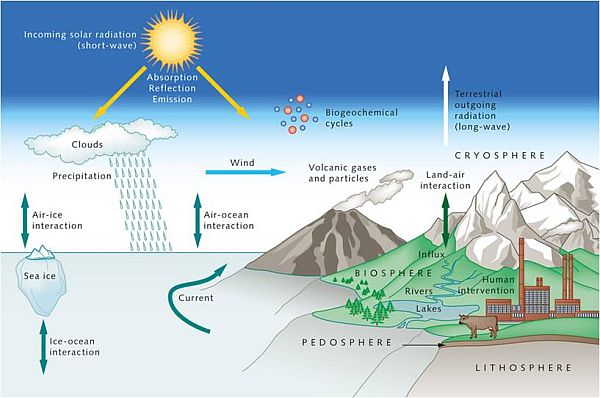

In the Rowland-Molina theory of ozone depletion (RMTOD), the extreme volatility and chemical inertness of the HHC ensure that there is no natural sink for these chemicals in the troposphere, and therefore, once emitted, they may remain in the atmosphere for 40 to 150 years. They can then be transported by diffusion and atmospheric motion to the stratospheric ozone layer, where they are exposed to solar radiation at frequencies that cause them to dissociate into chlorine atoms and free radicals. The chlorine atoms can then act as catalytic agents of ozone destruction in a chemical reaction cycle described in the paper and reproduced in Figure 1 below, taken from the Rowland and Molina 1974 paper.

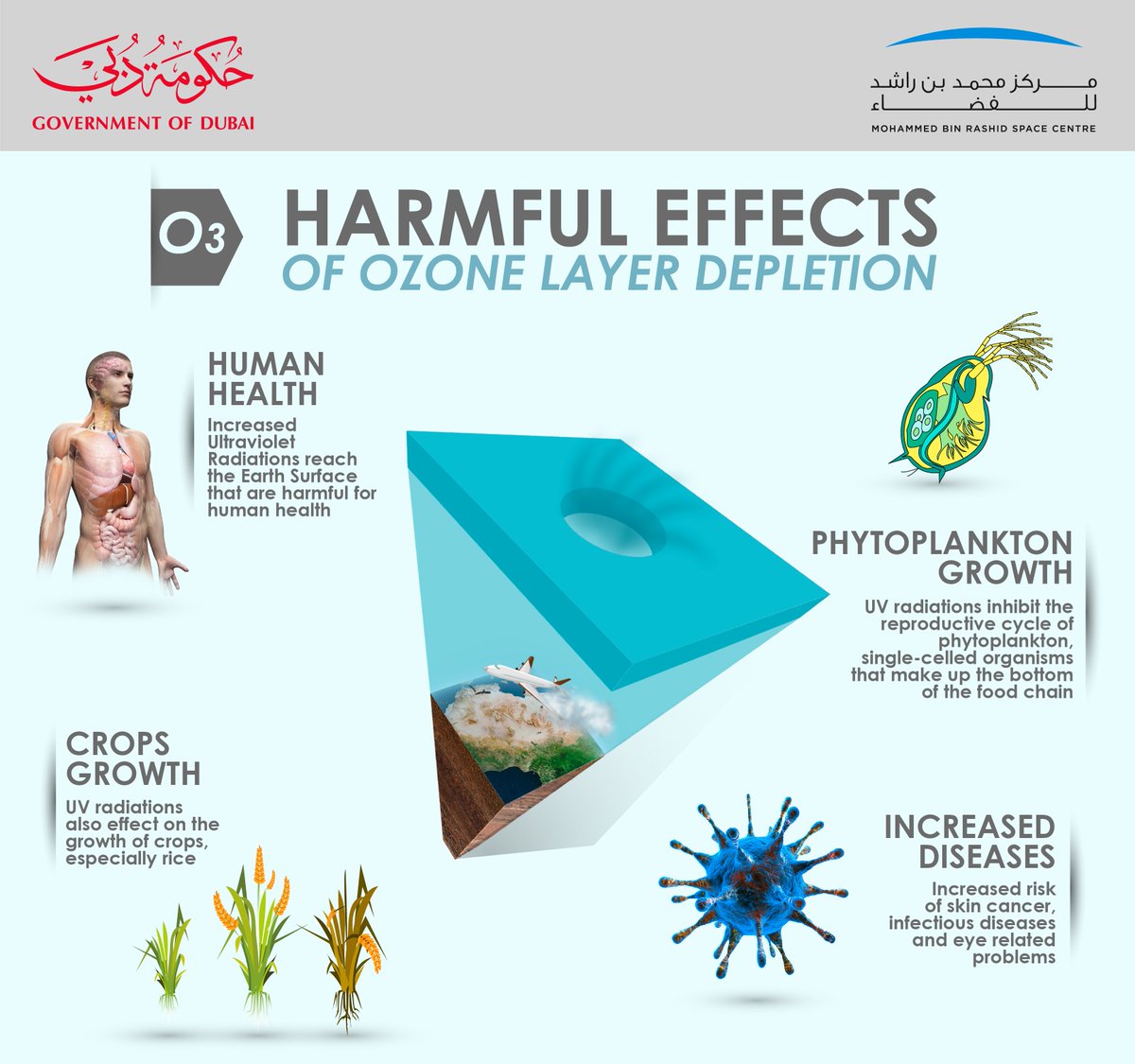
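The chlorine cycle is commonly summarized as below. This is the standard textbook form of the mechanism, shown with CFCl3 (CFC-11) as an example source gas; it is not a transcription of the figure. Because the chlorine atom is regenerated in the second cycle step, a single atom can take part in many cycles, which is what makes the process catalytic.

```latex
\begin{aligned}
\mathrm{CFCl_3} + h\nu &\rightarrow \mathrm{CFCl_2} + \mathrm{Cl} && \text{(photolysis, e.g. CFC-11)}\\
\mathrm{Cl} + \mathrm{O_3} &\rightarrow \mathrm{ClO} + \mathrm{O_2} && \text{(cycle step 1)}\\
\mathrm{ClO} + \mathrm{O} &\rightarrow \mathrm{Cl} + \mathrm{O_2} && \text{(cycle step 2)}\\
\mathrm{O_3} + \mathrm{O} &\rightarrow 2\,\mathrm{O_2} && \text{(net of steps 1 and 2)}
\end{aligned}
```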

Ozone depletion poses a danger to life on earth because the ozone layer protects life on the surface of the earth from the harmful effects of UVB radiation. The Rowland-Molina paper is the second key event that led to the Montreal Protocol. It established that the atmospheric accumulation of HHC is not harmless and provided a theoretical framework linking HHC to ozone depletion that exposes life on the surface of the earth to the harmful effects of UVB radiation.

The third key event in the genesis of the Montreal Protocol was the paper by Farman, Gardiner, and Shanklin (Farman, 1985), which is taken as empirical evidence for the kind of ozone depletion described by the RMTOD. The essential finding of the Farman paper is contained in the top frame of the paper’s Figure 1, which is reproduced here as Figure 2 below. Ignoring the very light lines in the top frame of Figure 2, we see two dark curves, one darker than the other. The darker curve contains average daily values of total column ozone in Dobson units for the 5-year test period 1980-1984. The lighter curve shows daily averages for the 16-year reference period 1957-1973. The authors drew two conclusions from the graph: (1) atmospheric ozone levels are lower in the test period than in the reference period, and (2) the difference is more dramatic in the two spring months of October and November than in the summer and fall. The authors interpreted the difference, and its seasonality, in terms of the ozone depletion chemistry and kinetics described by Molina and Rowland (Molina, 1974).

The Farman paper was thus hailed as empirical evidence of RMTOD, and the science of ozone depletion due to the atmospheric release of HHC appeared to be well established by these three key papers. First, the atmospheric release of HHC caused them to accumulate in the atmosphere on a planetary scale because they are insoluble and chemically inert (Lovelock). Second, their long life and volatility ensure that they will end up in the stratosphere, where radiation dissociates them to release chlorine atoms that act as catalytic agents of ozone depletion (Molina-Rowland). Third, the Farman et al. 1985 paper provides empirical evidence of ozone depletion and therefore validates the RMTOD. The Montreal Protocol was put in place on this basis. LINK TO RELATED POST ON FARMAN ETAL 1985: https://tambonthongchai.com/2019/03/12/ozone1966-2015/

WE MOVE NOW TO THE STUDY PRESENTED HERE THAT CAN BE DESCRIBED AS A CRITICAL EVALUATION OF THE WORKS OF ROWLAND AND MOLINA AND THOSE OF FARMAN ET AL.

DATA AND METHODS: Total column ozone (TCO) measurements made with Dobson spectrophotometers at ground stations are used in this study. Twelve stations are selected to represent a large range of latitudes. The selected stations, each identified with a three-character code, are listed below with their global coordinates. Ozone data from these stations are provided online by NOAA/ESRL and by the British Antarctic Survey. Most stations provide daily mean values of total column ozone in Dobson units. The data span 1957 to 2015. The first year of available data varies from station to station in the range 1957 to 1987, and the last month from August 2013 to December 2015. Some months and some years in the span of measurements contain no data for many of the stations. The core study period is somewhat arbitrarily defined as ten Lustra (5-year periods) from 1966 to 2015. The Farman et al. 1985 paper provides a precedent for using changes in 5-year means to evaluate long-term trends. The period definitions are not precise for the first and last Lustra: the first Lustrum is longer than five years for some stations and shorter than five years for others, and the last Lustrum is imprecise because the last month of available data varies. The calendar months are arranged from September to August in the tables and charts to maintain seasonal integrity. The seasons are roughly defined as follows: September-November (northern autumn and southern spring), December-February (northern winter and southern summer), March-May (northern spring and southern autumn), and June-August (northern summer and southern winter). Daily and intraday ozone data are averaged into monthly means for each period.
These monthly means are then used to study trends across the ten Lustra for each calendar month and to examine the average seasonal cycle for each Lustrum. Trends in mean monthly ozone and seasonal cycles are compared to examine differences among latitudes. These patterns are then used to compare and evaluate the chemical and transport theories of changes in atmospheric ozone. The chemical explanation of these changes rests on the destruction of ozone by chlorine atoms derived from HHC (Molina, 1974), while the transport theory describes them in terms of the Brewer-Dobson circulation (BDC) and polar vortices that carry ozone from the tropics, where it is formed, to the higher latitudes, where it is more stable.
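The binning into Lustra and the two views of the resulting table (the trend of one calendar month across the Lustra, and the September-to-August seasonal cycle of one Lustrum) can be sketched in Python as follows. The input format (a list of `(date, DU)` pairs for one station), the function names, and the rule that folds years outside 1966-2015 into the first and last Lustra are assumptions for illustration, not the original analysis code.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Calendar months in the September-to-August order used in the tables.
SEP_TO_AUG = [9, 10, 11, 12, 1, 2, 3, 4, 5, 6, 7, 8]


def lustrum_number(year, start=1966, count=10):
    """Lustrum (5-year period) number 1..count for a calendar year.

    Assumption: years before `start` fold into Lustrum 1 and years after the
    last period fold into Lustrum `count`, one way to model the imprecise
    first and last Lustra described in the text.
    """
    n = (year - start) // 5 + 1
    return min(max(n, 1), count)


def monthly_means_by_lustrum(daily):
    """Average (date, DU) readings into a {(lustrum, month): mean DU} table."""
    cells = defaultdict(list)
    for day, du in daily:
        cells[(lustrum_number(day.year), day.month)].append(du)
    return {key: mean(values) for key, values in cells.items()}


def trend_for_month(table, month, count=10):
    """Column view: one calendar month across Lustra 1..count (None = no data)."""
    return [table.get((k, month)) for k in range(1, count + 1)]


def seasonal_cycle(table, lustrum):
    """Row view: one Lustrum from September to August (None = no data)."""
    return [table.get((lustrum, m)) for m in SEP_TO_AUG]


if __name__ == "__main__":
    # Hypothetical readings, for illustration only.
    readings = [
        (date(1971, 10, 1), 270.0),
        (date(1972, 10, 15), 262.0),
        (date(1991, 10, 3), 140.0),
    ]
    table = monthly_means_by_lustrum(readings)
    print(trend_for_month(table, 10))
```

Keeping missing cells as `None` rather than filling them mirrors the gaps visible in the station tables.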

TABLE 1: LIST OF STATIONS

DATA ANALYSIS STATION BY STATION: AMS SOUTH POLE ANTARCTICA

The data for AMS are summarized above. The first panel shows the number of observations reported in the dataset for each month of each Lustrum. We see in this panel that data are sparse for the months of September and March. The second panel contains the average value of total column ozone in Dobson Units (DU) for each month of each Lustrum. The columns in this panel represent long term trends for each month across the ten Lustra and the rows represent the average seasonal cycle in each Lustrum across the twelve calendar months. These trends and seasonal cycles are depicted graphically below.

Visually, Figure 3 indicates that the steepest long-term trends and the largest differences in ozone levels among months are seen in the southern spring months of September, October, and November. In October, ozone levels declined steeply, losing more than 127 DU from Lustrum#2 (266 DU) to Lustrum#6. A similar long-term decline is seen for November, where the decline persists from Lustrum#1 (344 DU) to Lustrum#9 (173 DU). In both of these months total column ozone at AMS fell well below the arbitrary threshold of 200 DU at which atmospheric ozone concentration is described as an “ozone hole”. September data, though patchy, appear to mirror the October decline. The December decline is not as steep as those in October and November. In addition, the data show month-to-month differences in ozone levels among the spring months exceeding 50 DU. For the rest of the year, ozone levels are generally flat at about 280 DU throughout the study period, with only small differences among the Lustra and among the months. A gradual decline in ozone levels from 280 DU to 240 DU is evident in the era prior to 1996, from Lustrum#1 to Lustrum#6. The ozone level appears to be stable in the post-1996 era at above 230 DU. The ozone hole appears to be a seasonal phenomenon peculiar to the spring month of October.

The average seasonal cycle for each Lustrum in Figure 4 shows ozone levels from September to August. In general, the ozone level falls to its lowest level, well below 200 DU, in October, then rises sharply during November and December to above 300 DU, with a gradual decline thereafter to 230 DU in late winter (August) before sinking back into ozone hole conditions in spring (October). The range of the seasonal cycle is about 120 DU. The seasonal cycle differs greatly among the Lustra prior to 1991 (left panel of Figure 4), but these differences appear to have narrowed since then (right panel of Figure 4).

HLB: Halley Bay, Antarctica

The data for HLB are summarized in Table 4 above. The first panel shows that data are sparse for the months of May, June, and July. The seasonal cycle for each Lustrum and the long-term trend for each month across the Lustra, derived from Table 4, are depicted graphically in Figures 5 and 6. In Figure 5 the spring months (September, October, November) show a significant decline in ozone levels from Lustrum#1 to Lustra #6 and #7, with large differences in ozone concentration among the months. Mean October ozone levels fell almost 170 DU from Lustrum#1 (300 DU) to Lustrum#6 (132 DU). In the same period November ozone levels fell 150 DU. Declines of similar magnitude are seen in the spring month of September (140 DU), the summer month of December (80 DU), and the winter month of August (90 DU).

These data are historically important because the decline in October and November ozone levels by 80 DU or more from Lustrum#2 to Lustrum#4 reported by Farman et al (Farman, 1985) first alerted the world to what was thought to be catastrophic anthropogenic ozone depletion and served to validate the chemical theory of ozone depletion (RMTOD) attributed to HHC emissions (Molina, 1974). These data are therefore the proximate cause that triggered the Montreal Protocol of 1987 and its worldwide ban on HHC. The banned chemicals are described in the Protocol as ozone depleting substances.

In addition, the left panel of Figure 5 shows large differences in ozone concentration among the months that vary over the long term across the Lustra. The range of monthly values doubles from 75 DU in Lustrum#1 to 150 DU in Lustrum#6 before shrinking back to 90 DU in Lustrum#10. However, the large differences among months seen in the spring are mostly absent in the summer months of December, January, and February, where we see only a modest decline in ozone levels, with both the differences among the months and the rate of decline eroding with time across the Lustra. The data also show that ozone levels appear to have stabilized since Lustrum#6. Figure 6 shows that, as at AMS, the September to August seasonal cycle has a steep rise during the southern spring months of October and November, with a gradual decline during summer, autumn, and winter. Also as at AMS, large differences in ozone concentration among the Lustra are seen only in the seasonal cycles prior to 1995; these differences are greatly reduced in the period since 1995. Taken together, the data do not indicate that the sharp decline reported by Farman for the period Lustrum#2 to Lustrum#4 can be generalized as a phenomenon across the sample period.

LDR: Lauder, New Zealand

Total column ozone data from LDR are available for a relatively short period. Data for the first four Lustra are not available, and the fifth and tenth Lustra are abbreviated. Unlike the Antarctica data, the graphical display of the LDR data in Figure 7 does not show a trend in ozone concentration for any calendar month. Also, the lowest levels of ozone at LDR are generally higher than those at the Antarctica stations by about 100 DU. Yet another distinction from Antarctica is that the seasonal cycles for all Lustra in the dataset appear to converge into a single coherent pattern, shown in Figure 8. In an 80 DU seasonal cycle, the ozone level is highest in the southern spring month of October, at about 350 DU, falling to a low of 270 DU in March. This pattern is the exact reverse of the seasonal cycle in Antarctica.

PTH: Perth, Australia

Total column ozone data for PTH are displayed in Table 6 and in Figures 9&10. No long term trend in the ozone levels is apparent for any calendar month. A 50 DU seasonal cycle shows a high of 320 DU in the southern spring falling to a low of 270 DU in summer – in sync with LDR but shallower.

SMO: American Samoa

The data for SMO show a steady ozone level of approximately 250 DU with a standard deviation of 6 DU for all months of all Lustra. There is no evidence of trends. The seasonal cycle is almost flat.

MLO: Mauna Loa, Hawaii

MLO ozone data show no trends across the ten Lustra. A very shallow 40 DU seasonal cycle fluctuates from a low of 240 DU in January to a high of 280 DU in May for all Lustra. The seasonal cycle is not in sync with those observed in the southern hemisphere.

WAI: Wallops Island, Virginia

Total column ozone data at WAI contain no apparent long-term trend. They show a seasonal cycle with an amplitude of 70 DU running from a low of 280 DU in October to a high of 350 DU in April.

BDR: Boulder, Colorado

Total column ozone data from the BDR station show no long term trends. An 80DU seasonal cycle rises from 270DU in October to 350DU in April similar to the seasonal cycle at WAI.

CAR: Caribou, Maine

The data show a modest decline in ozone levels at CAR in the month of December at a rate of about 5DU per Lustrum on average. A 100DU seasonal cycle runs from a low of around 300DU in October to a high of 400DU in February. The seasonal cycle is fairly uniform across the ten Lustra.

BIS: Bismarck, North Dakota

There are no sustained trends in the ozone data for BIS, although a modest decline in the range of 2 to 4 DU per Lustrum on average is seen in the months of March and April. A 100 DU seasonal cycle runs from a low of 280 DU in October to a high of 380 DU in March. The seasonal cycle is fairly uniform for the ten Lustra and in sync with the seasonal cycles observed at CAR and in Antarctica.

FBK: Fairbanks, Alaska

Total column ozone data from FBK summarized in Table 13 show missing data for Lustrum#3 and for the northern fall and winter months of November, December, and January. The graphical depiction of the long term trends for each month (Figure 23) and for the average seasonal cycle for each Lustrum (Figure 24) contain gaps corresponding to the missing data. Still it is possible to discern in these graphs the absence of patterns or trends in ozone levels. In fact we find comparatively high ozone levels in the range of 300DU to 400DU corresponding to a 100DU seasonal cycle that goes from a low of less than 300DU in September to a high above 400DU in March. The seasonal cycle is in sync with those observed at CAR, BIS, and in Antarctica.

BRW: Barrow, Alaska

Ozone levels are generally high compared with those in the lower latitudes and in the southern hemisphere. A 100DU seasonal cycle is evident in Figure 26 with the ozone level rising from a low of 300DU in September and October to a high of over 400DU in March and April. The seasonal cycle is similar to the ones observed in Antarctica and in the higher northern latitudes. No long term trends are evident.

SUMMARY AND COMPARISONS

The annual cycle in total column ozone at different latitudes

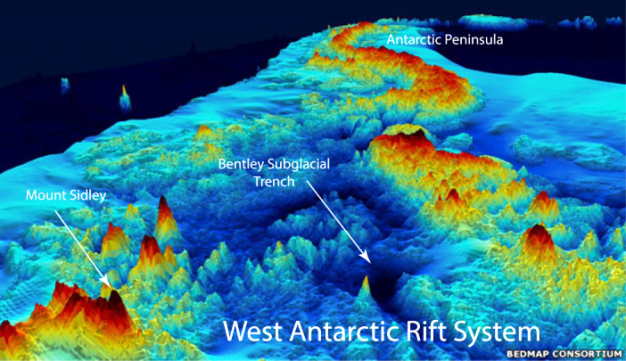

Figure 27 shows that the range of observed ozone levels is a strong function of latitude. It reaches a minimum of about 20 DU in the tropics and increases asymmetrically toward the two poles. The hemispheric asymmetry has two dimensions. First, the northward increase in range is gradual while the southward increase is steep. Second, the northward increase in range comes mostly from rising maximum values while the southward increase comes mostly from falling minimum values. The midpoint between the HIGH and LOW values is symmetrical within ±45° of the equator but diverges sharply beyond 45°, with the northern leg continuing to rise while the southern leg changes to a steep decline, as seen in Figure 28. Hemispheric asymmetry in atmospheric circulation patterns is well known (Butchart, 2014) (Smith, 2014), and the corresponding asymmetry in ozone levels is also recognized (Crook, 2008) (Tegtmeier, 2008) (Pan, 1997). These asymmetries are also evident when comparing seasonal cycles among the ground stations (Figure 29). The observed asymmetries are attributed to differences in land-water patterns between the two hemispheres, with specific reference to the large ice-covered land mass at the South Pole (Oppenheimer, 1998) (Kang, 2010) (Turner, 2009). The climatic uniqueness of Antarctica is widely recognized (Munshi, Mass Loss in the Greenland and Antarctica Ice Sheets, 2015) (NASA, 2016) (NASA, 2015).
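A minimal sketch of the HIGH, LOW, range, and midpoint quantities, assuming HIGH and LOW are the maximum and minimum of a station's average September-to-August seasonal cycle (the text does not spell out the exact definition). The function name and the example cycle are hypothetical.

```python
def cycle_summary(cycle):
    """HIGH, LOW, range, and midpoint of a seasonal cycle of monthly means (DU).

    `cycle` holds up to twelve monthly means; None marks a month with no data,
    which is skipped rather than filled.
    """
    values = [v for v in cycle if v is not None]
    if not values:
        raise ValueError("cycle contains no data")
    high, low = max(values), min(values)
    return {
        "high": high,
        "low": low,
        "range": high - low,           # the quantity compared across latitudes
        "midpoint": (high + low) / 2,  # the HIGH-LOW midpoint
    }


if __name__ == "__main__":
    # Hypothetical September-to-August cycle for a mid-latitude station.
    cycle = [300, 280, 290, 310, 330, 340, 345, 350, 340, 320, 310, None]
    print(cycle_summary(cycle))
```

Computing these four numbers per station and plotting them against station latitude gives the kind of comparison described for the range and midpoint curves.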

The left panel of Figure 30 represents the southern hemisphere from AMS (-90°) to SMO (-14°). The right panel represents the northern hemisphere from MLO (+19.5°) to BRW (+71°). The x-axis in each panel indicates the calendar months of the year from September = 1 to August = 12. The ordinate measures, for each calendar month, the average rate of change in total column ozone from one Lustrum to the next, estimated as the OLS slope of mean total column ozone regressed against Lustrum number. For example, in the left panel we see that in the month of September (x=1) ozone levels at HLB (shown in red) fell at an average rate of 15 DU per Lustrum over the entire study period; and in the right panel we see that in the month of July (x=11) ozone levels at FBK (shown in orange) rose at an average rate of more than 2 DU per Lustrum over the entire study period. The full study period is 50 years divided into 10 Lustra, but it is abbreviated for some stations according to data availability.
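A minimal sketch of the per-month trend plotted in Figure 30, assuming each station's means for one calendar month are stored as a list indexed by Lustrum. The function names are illustrative and not taken from the original analysis; dropping Lustra with no data (rather than interpolating) is also an assumption, chosen to match the abbreviated records at some stations.

```python
def ols_slope(xs, ys):
    """Ordinary least squares slope of ys regressed on xs."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


def slope_per_lustrum(monthly_means):
    """Trend in DU per Lustrum for one calendar month at one station.

    monthly_means[i] is the mean for Lustrum i + 1. Lustra without data (None)
    are dropped rather than interpolated.
    """
    pairs = [(i + 1, v) for i, v in enumerate(monthly_means) if v is not None]
    if len(pairs) < 2:
        raise ValueError("need data for at least two Lustra")
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    return ols_slope(xs, ys)


if __name__ == "__main__":
    # Hypothetical September means for Lustra 1..10 (DU); Lustrum 3 is missing.
    september = [300, 290, None, 265, 250, 240, 236, 238, 240, 241]
    print(round(slope_per_lustrum(september), 2))
```

Repeating this for all twelve calendar months at each station yields one curve per station in the layout of Figure 30.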

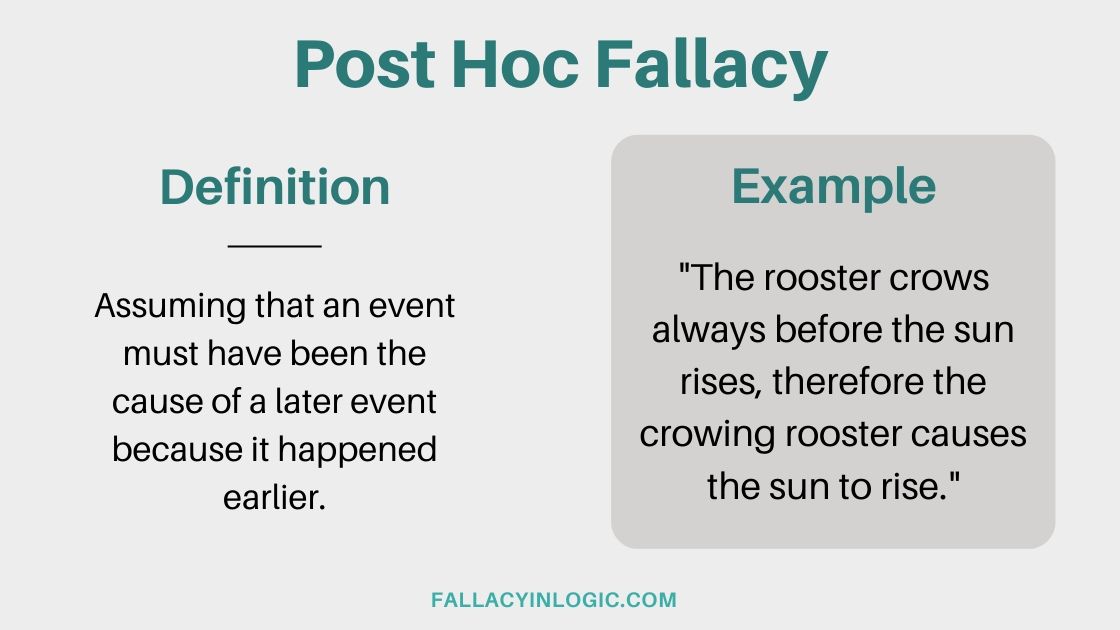

The concern about ozone depletion derives from the finding by Farman et al. in 1985 that ozone levels at HLB fell more than 100 DU from the average value for October in 1957-1973 to the average value for October in 1980-1984. In comparison, changes of ±5 DU from Lustrum to Lustrum seem inconsequential. In that light, if we somewhat arbitrarily describe changes within ±5 DU per Lustrum as insignificant and perhaps representative of random natural variability, what we see in Figure 30 is that, except at the two Antarctica stations (AMS and HLB), no average change in monthly mean ozone from Lustrum to Lustrum falls outside this range.
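Applying the ±5 DU per Lustrum band amounts to a simple filter over the per-month slopes. The sketch below assumes a hypothetical mapping from station code to twelve slopes ordered September to August; the station codes in the example are placeholders, not real stations, and treating a slope of exactly ±5 DU as inside the band is a choice made here.

```python
NOISE_BAND_DU = 5.0  # the somewhat arbitrary ±5 DU per Lustrum band from the text


def months_outside_band(slopes_by_station, band=NOISE_BAND_DU):
    """Map each station to the month positions whose |slope| exceeds `band`.

    slopes_by_station maps a station code to twelve slopes in DU per Lustrum,
    ordered September (position 1) to August (position 12), as on the x-axis
    of Figure 30. Stations with every slope inside the band are omitted.
    A slope of exactly ±band counts as inside.
    """
    flagged = {}
    for station, slopes in slopes_by_station.items():
        months = [pos for pos, s in enumerate(slopes, start=1) if abs(s) > band]
        if months:
            flagged[station] = months
    return flagged


if __name__ == "__main__":
    # Hypothetical slopes for two made-up stations.
    slopes = {
        "XXX": [-15.0, -12.0, -9.0] + [-1.0] * 9,
        "YYY": [1.5] * 10 + [2.2, 0.8],
    }
    print(months_outside_band(slopes))
```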

It is therefore not likely that the HLB data reported by Farman et al. can be generalized globally. We conclude from this analysis that the Farman et al. study, the only empirical evidence presented for ozone depletion and thought to validate the Rowland-Molina theory of ozone depletion, is flawed and therefore does not serve as evidence of anthropogenic ozone depletion.

And yet, Farman et al. 1985 served, and still serves to this day, as the only empirical evidence for the ozone crisis that created the role for the UN in global environmentalism.


OZONE DEPLETION: PART-1

Posted on: September 30, 2020

THIS POST IS A STUDY OF TRENDS IN STRATOSPHERIC OZONE CONCENTRATION 1979-2015 FROM SATELLITE DATA AND A TEST OF THE ROWLAND-MOLINA THEORY OF ANTHROPOGENIC CHEMICAL OZONE DEPLETION DESCRIBED IN FARMAN ET AL. 1985 AND THE MONTREAL PROTOCOL.

SUMMARY: Mean global total ozone is estimated as the latitudinally weighted average of total ozone measured by the satellite-mounted TOMS and OMI ozone measurement instruments for the periods 1979-1992 and 2005-2015 respectively. The TOMS dataset shows an OLS depletion rate of 0.65 DU per year on average in mean monthly ozone from January 1979 to December 1992. The OMI dataset shows an OLS accretion rate of 0.5 DU per year on average in mean monthly ozone from January 2005 to December 2015. These conflicting and inconsequential OLS trends may be explained by the random variability of nature and by violations of OLS assumptions that can create the so-called Hurst phenomenon. These findings are inconsistent with the Rowland-Molina theory of ozone depletion by anthropogenic chemical agents because the theory implies continued and dangerous depletion of total ozone on a global scale until the year 2040.

POLICY IMPLICATION: THE APPARENT MONTREAL PROTOCOL SUCCESS THAT VAULTED THE UNITED NATIONS INTO A GLOBAL ROLE IN CLIMATE CHANGE HAS NO SUPPORTING EVIDENCE. IT SHOULD ALSO BE MENTIONED THAT THERE IS NO ROLE FOR THE OZONE HOLE IN THE ROWLAND-MOLINA THEORY OF OZONE DEPLETION. THE OZONE HOLE IS A LOCALIZED EVENT. THE ROWLAND-MOLINA THEORY OF OZONE DEPLETION RELATES ONLY TO LONG TERM TRENDS IN GLOBAL MEAN OZONE LEVEL. NO SUCH TREND HAS EVER BEEN PRESENTED AS EVIDENCE, PROBABLY BECAUSE NO SUCH TREND IS FOUND IN THE DATA. THE OZONE DEPLETION CRISIS AND ITS MONTREAL PROTOCOL SOLUTION APPEAR TO BE AN IMAGINED CRISIS THAT WAS SIMPLY DECLARED TO HAVE BEEN SOLVED.

THE OZONE DEPLETION ISSUE: Atmospheric ozone plays an important role in protecting life on the surface of the earth from the harmful effects of UV-B radiation. The mechanism of this protection involves the Chapman Cycle, which both forms and destroys ozone (Chapman, 1930) (Fisk, 1934). The essential reaction equilibrium between oxygen molecules (O2) and ozone molecules (O3) is 3O2↔2O3. In the absence of UVB (280-315 nm) and UVC (100-280 nm) radiation, O3 concentration is negligible and undetectable because the equilibrium heavily favors O2. In the presence of UVC, the equilibrium shifts towards O3 because UVC splits O2 into oxygen atoms, which are highly reactive free radicals. Their chance collision with O2 forms ozone, and their collision with O3 destroys ozone. The much higher probability of collision with O2 favors an equilibrium inventory of O3. In the presence of both UVC and UVB, as in the ozone layer, the equilibrium shifts back towards O2 because UVB destroys O3 and lowers the equilibrium concentration of ozone. In this process UVB is almost completely absorbed, and life as we know it on the surface of the earth is protected from the known harmful effects of UVB, which include increased incidence of skin cancer (McDonald, 1971) (UNEP, 2000) and adverse effects on photosynthesis in plants (Allen, 1998) (Tevini, 1989). The importance of the ozone layer and environmental concerns about man-made chemicals that may cause ozone depletion are understood in this context (Molina, 1974) (UNEP, 2000). Stratospheric ozone forms over the tropics where UVB irradiance is direct. It is distributed to the higher latitudes primarily by the Brewer-Dobson Circulation or BDC (Brewer, 1949) (Dobson, 1956). Mid-latitude ozone concentration tends to be higher than that over the tropics, partly because stratospheric ozone is more stable when UVB irradiance is at an inclination.
The distribution of ozone to the extreme polar latitudes is asymmetrical and less efficient than that to the mid-latitudes (Kozubek, 2012) (Tegtmeier, 2008) (Weber, 2011). The high level of interest in atmospheric ozone today derives from the discovery in 1971 of a global distribution of synthetic halogenated hydrocarbons in the atmosphere (Lovelock, 1973) and the analysis of its implications by Molina and Rowland in terms of the ability of chlorine free radicals from synthetic halogenated hydrocarbons to catalyze the destruction of ozone in the stratosphere (Molina, 1974). The discovery in 1985 that the mean monthly atmospheric ozone over the South Pole for the period 1980-1984 was dramatically lower than that in the period 1957-1973 (Farman, 1985) served as empirical evidence of the Rowland-Molina ozone depletion mechanism. A fatal flaw in the Farman 1985 paper is described in a related post: LINK: https://tambonthongchai.com/2019/03/12/ozone1966-2015/ Critical review and commentary provided by Kirk P. Fletcher and Mhehed Zherting helped to improve this presentation and are greatly appreciated.
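The Chapman reactions referred to above are conventionally written as follows, where M is a third body (for example N2 or O2) that carries away excess energy. This is the standard textbook form rather than a transcription from the cited sources. One O2 photolysis followed by two ozone-forming collisions sums to the net 3O2 → 2O3 given in the text, while the last two reactions return ozone to O2.

```latex
\begin{aligned}
\mathrm{O_2} + h\nu &\rightarrow 2\,\mathrm{O} && (\lambda < 242\ \text{nm})\\
\mathrm{O} + \mathrm{O_2} + \mathrm{M} &\rightarrow \mathrm{O_3} + \mathrm{M} && \text{(ozone formation)}\\
\mathrm{O_3} + h\nu &\rightarrow \mathrm{O_2} + \mathrm{O} && \text{(ozone photolysis)}\\
\mathrm{O} + \mathrm{O_3} &\rightarrow 2\,\mathrm{O_2} && \text{(ozone loss)}
\end{aligned}
```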

THE DATA AND DATA ANALYSIS: Satellite-based measurements of total ozone began in late 1978 with the Total Ozone Mapping Spectrometer (TOMS) aboard Nimbus-7 and continued on other spacecraft after 1992 (NASA, 1992) (NASA, 2015), but with some deterioration in data quality since 1993, and particularly after the year 2000, due to instrument degradation (Ziemke, 2011) (NASA GISS, 2015). However, very high quality daily gridded total ozone data have been generated since 2005 aboard the Aura satellite by the Ozone Monitoring Instrument (OMI), provided by the Netherlands Agency for Aerospace Programmes (NIVR) in collaboration with the Finnish Meteorological Institute (FMI) (NASA, 2015) (McPeters, 2015) (Ziemke, 2011) (NLR, 2016).

The OMI data provide complete global coverage in one-degree steps in both longitude and latitude on a daily basis. However, there are large gaps in the data, particularly in the extreme latitudes during their respective winter months. For the purpose of our analysis these data are averaged across all longitudes in each one-degree latitude step for all 4,086 days in the sample period 1/1/2005 to 3/10/2016. Analysis of data availability across latitudes shows 100% data availability between 57S and 71N but sparse data in the more extreme latitudes, particularly in the Southern Hemisphere. Gaps in the polar data are patterned rather than random because they occur only in the respective polar winters, and this pattern may bias the results.
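The longitude averaging described above, and the latitudinal weighting used for the global means, can be sketched as follows. The post does not state its weighting scheme, so the cos(latitude) area weight here is an assumption, as is the data layout (a mapping from band-centre latitude to a row of values, with `None` for missing cells). Skipping bands that have no data at all, as this sketch does, is exactly how the winter-pole gaps can bias a global mean: the average then covers a different area in different seasons.

```python
import math


def zonal_mean(row):
    """Average one latitude band across longitudes, skipping missing cells (None)."""
    values = [v for v in row if v is not None]
    return sum(values) / len(values) if values else None


def weighted_global_mean(grid):
    """Latitude-weighted mean total ozone (DU) for one day or one month.

    grid maps a band-centre latitude in degrees to that band's values across
    longitude. Each band is weighted by cos(latitude), proportional to the area
    of an equal-width band (an assumed scheme). Bands with no data at all, such
    as a pole in its winter, are skipped, so the mean covers only observed area.
    """
    num = den = 0.0
    for lat, row in grid.items():
        z = zonal_mean(row)
        if z is None:
            continue
        w = math.cos(math.radians(lat))
        num += w * z
        den += w
    if den == 0.0:
        raise ValueError("grid contains no data")
    return num / den


if __name__ == "__main__":
    # Hypothetical three-band grid; the -89.5 band is empty (polar night).
    grid = {0.0: [300.0, 300.0], 60.0: [400.0, None], -89.5: [None, None]}
    print(round(weighted_global_mean(grid), 1))
```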

Data from the satellite-mounted TOMS (Total Ozone Mapping Spectrometer) instruments for the measurement of atmospheric ozone are maintained and made available online by the NASA Goddard Institute for Space Studies (NASA GISS, 2015) (Ziemke, 2011) (NASA, 2011) (NASA TOMS ARCHIVE, 1992). These data are available as gridded monthly means for the period 1979-1992 in one-degree by 1.25-degree grids for all latitudes and longitudes. There are a total of 170 months in the data series. As in the OMI data (and in ground station data), the extreme latitude data are mostly absent during their respective winters. A comparison of TOMS, OMI, and ground station data reveals some data anomalies: TOMS and OMI data are not comparable because they are very different from each other, and both differ from the ground station data. Therefore, the two sample periods are tested separately for trends in mean monthly global ozone. Ground station data are presented in a related post on this site. Trends are estimated using simple OLS regression of monthly means against time. A deseasonalized series is not used for this purpose because the seasonal cycles of monthly means are irregular when defined in terms of calendar months, and imposing a common calendar-month seasonal cycle on these data may introduce a bias (Box, 1994) (Draper & Smith, 1998).
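A minimal sketch of the trend test described above: OLS of the monthly global means on a month index, with no deseasonalizing. The function name is hypothetical. The standard error it returns relies on independent errors, which is the OLS assumption that the Hurst analysis in this post is meant to check, so it should be read with that caveat.

```python
import math


def monthly_trend(series):
    """OLS trend of a monthly series regressed on month index 0, 1, 2, ...

    Returns (slope in DU per month, slope in DU per year, standard error of
    the monthly slope). The series is not deseasonalized. The standard error
    assumes independent errors.
    """
    n = len(series)
    if n < 3:
        raise ValueError("need at least three months")
    t_bar = (n - 1) / 2
    y_bar = sum(series) / n
    stt = sum((t - t_bar) ** 2 for t in range(n))
    sty = sum((t - t_bar) * (y - y_bar) for t, y in enumerate(series))
    slope = sty / stt
    intercept = y_bar - slope * t_bar
    sse = sum((y - (intercept + slope * t)) ** 2 for t, y in enumerate(series))
    std_err = math.sqrt(sse / (n - 2) / stt)
    return slope, 12 * slope, std_err


if __name__ == "__main__":
    # Hypothetical two-year series declining 0.5 DU per month.
    print(monthly_trend([300 - 0.5 * t for t in range(24)]))
```

The annualizing step is the same arithmetic as the TOMS figure quoted in this post: 12 × 0.0542 DU per month ≈ 0.65 DU per year.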

The Hurst Exponent of these time series: The second sample period, containing more than 4,000 days of gridded daily means, provides a sufficient sample size to use Rescaled Range (R/S) analysis to estimate the Hurst exponent H of daily mean global ozone (Hurst, 1951) (Mandelbrot-Wallis, 1969). R/S requires subsampling without replacement in multiple cycles. We use seven cycles of subsampling with 1, 2, 3, 4, 6, 8, and 10 subsamples taken in the seven cycles, for a total of 34 subsamples subjected to R/S analysis. In each subsample, the range R is computed as the difference between the maximum and minimum values of the cumulative deviations from the subsample mean. The value of R/S is the range R divided by the standard deviation S of the subsample. The H-value for each subsample is then computed as H = ln(R/S)/ln(N), where N is the subsample size. The Hurst exponent of the time series is then estimated as the simple arithmetic average of all 34 values of H. The value of H indicates whether the usual independence assumption of OLS regression is violated. Under conditions of independence and Gaussian random behavior, the theoretical value of the Hurst exponent is H=0.50. Gaussian and independence assumptions are not seriously violated if the Hurst exponent lies between 0.40 and 0.60, but higher values indicate long-term memory and persistence. Under these conditions random numbers can appear to form patterns and spurious statistically significant trends. However, sample size and the subsampling structure used in Rescaled Range analysis can bias the computed value of H. It is therefore necessary to “calibrate” the subsample structure with a known Gaussian series: the Hurst exponent of the test series must be compared with the value of H computed for the Gaussian series under identical estimation procedures and subsampling conditions.
Once the Hurst exponent of the latitudinally averaged global ozone time series is determined, it will be possible to evaluate the validity of OLS trends, particularly when they appear over short periods of time and are not robust to changes in the time frame. Memory and persistence in a time series are known to produce behavior that appears to create patterns out of randomness. The usual research procedure of looking for cause-and-effect explanations for observed patterns in the data can go awry under these conditions.
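The R/S procedure described above can be sketched in Python as follows. The text does not say how the subsamples are cut or which form of the standard deviation is used, so this sketch assumes contiguous, equal-length, non-overlapping blocks and the population standard deviation; the Gaussian calibration series uses Python's `random.gauss` with an illustrative seed. Function names are hypothetical.

```python
import math
import random
from itertools import accumulate
from statistics import fmean, pstdev

# Number of subsamples in each of the seven cycles: 1+2+3+4+6+8+10 = 34.
CYCLES = (1, 2, 3, 4, 6, 8, 10)


def rs_h(block):
    """H = ln(R/S) / ln(N) for one subsample of length N."""
    n = len(block)
    m = fmean(block)
    cum = list(accumulate(x - m for x in block))  # cumulative deviations from the mean
    r = max(cum) - min(cum)                        # range R
    s = pstdev(block)                              # standard deviation S (population form, assumed)
    return math.log(r / s) / math.log(n)


def hurst_rs(series, cycles=CYCLES):
    """Simple average of the subsample H values over all cycles.

    Assumption: each cycle splits the series into k contiguous, equal-length,
    non-overlapping blocks, dropping any remainder at the end.
    """
    hs = []
    for k in cycles:
        size = len(series) // k
        for i in range(k):
            hs.append(rs_h(series[i * size:(i + 1) * size]))
    return fmean(hs)


def gaussian_calibration(n, seed=0):
    """H of an i.i.d. Gaussian series of length n, estimated the same way."""
    rng = random.Random(seed)
    return hurst_rs([rng.gauss(0.0, 1.0) for _ in range(n)])


if __name__ == "__main__":
    n = 4086  # matches the number of OMI days cited in the text
    print("Gaussian baseline H:", round(gaussian_calibration(n), 3))
```

Because the raw estimator ln(R/S)/ln(N) tends to sit somewhat above 0.50 for finite Gaussian samples, the ozone series would be judged against the calibration value returned here rather than against 0.50 itself, which is the point of the calibration step in the text.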

DATA ANALYSIS AND RESULTS FOR TOMS NIMBUS7 DATA:

The monthly mean gridded total ozone data from archives of the now decommissioned TOMS Nimbus7 program are converted into latitudinally weighted global means, and these global means are depicted graphically in Figure 5. The data show that the seasonal cycle is irregular when described in terms of calendar months. The monthly means show a gradual decline in mean global total ozone at a rate of 0.0542 DU per month, or 0.65 DU per year. The observed rate of decline is inconsequential in the ozone depletion context. Though statistically significant, the decline is practically insignificant, in sharp contrast to the claim of a catastrophic anthropogenic destruction of the ozone layer and its dangerous consequences, including an alleged epidemic of skin cancer. Also, the ozone time series may violate the independence assumption of OLS regression, and the Hurst phenomenon in the ozone time series can create apparent patterns out of randomness that may be mistaken for trends. We should also note that the TOMS/Nimbus program has been decommissioned and that the OMI/Aura ozone measurement program, which started in 2005 and continues to this day (2016), is considered to provide much better measurements of total ozone than the discontinued TOMS/Nimbus program. The mean meridional pattern of mean monthly ozone for 1979-1992 in the TOMS dataset shows the efficiency of ozone distribution by atmospheric circulation. In the tropics, the only place where ozone is formed, the average ozone concentration is about 280 DU. At the mid-latitudes ozone concentration is higher, as high as 370 DU in the Northern Hemisphere and 340 DU in the Southern Hemisphere, because at these latitudes UVB irradiance arrives at an oblique angle and is therefore less efficient in destroying ozone. At the extreme latitudes, the ozone level drops because atmospheric circulations are less efficient in distributing ozone to these latitudes.
The distribution of ozone to the extreme latitudes is asymmetrical: it is less efficient in the Southern Hemisphere than in the Northern Hemisphere. Changes in ozone levels at these extreme latitudes, both seasonal and decadal, are therefore more likely to be the result of natural variations in atmospheric circulation than of ozone destruction by anthropogenic chemical agents. The implication of these patterns is that polar “ozone holes” cannot serve as evidence of ozone depletion.
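One way to form a latitudinally weighted global mean from gridded total ozone is sketched below. It assumes cosine-of-latitude weights (the weighting the post states for the OMI data; the exact TOMS weighting is not given), a regular grid supplied as one row per latitude band with `None` marking missing cells, and that bands with no data (for example during polar night) are simply skipped. The function name `global_mean` is hypothetical.

```python
import math


def global_mean(grid, lats_deg):
    """Cosine-latitude weighted global mean of a gridded field.

    grid[i] holds the values (DU) along latitude lats_deg[i]; None = missing.
    Each band is first averaged over longitude, then weighted by cos(latitude),
    which is proportional to the surface area of a thin latitude band.
    """
    num = den = 0.0
    for row, lat in zip(grid, lats_deg):
        vals = [v for v in row if v is not None]
        if not vals:
            continue  # skip bands with no data
        w = math.cos(math.radians(lat))
        num += w * sum(vals) / len(vals)
        den += w
    return num / den
```

For example, an equatorial band at 280 DU and a band at 60 degrees latitude at 340 DU combine to (280 × 1 + 340 × 0.5) / 1.5 = 300 DU, because the 60-degree band carries half the weight of the equatorial band.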

DATA ANALYSIS AND RESULTS FOR THE OMI DATA:

The OMI gridded daily total ozone data are smoothed and converted into latitudinally weighted global means with cosine weighting. These daily global means are depicted graphically in Figure 7. Smoothing was necessary because the raw data contain spikes that occur irregularly about once a month. Each spike consists of two anomalous values on adjacent days, one high and one low. The left panel of Figure 8 shows these spikes for the year 2005; they occur in all years. These spikes are assumed to be anomalous and are removed by replacing them with the mean value for day-7 to day-2. The data shown in Figure 7 are the smoothed values. The right panel of Figure 8 shows the average meridional pattern in the data from the South Pole to the North Pole. There is a trough of about 280 DU in the tropics, with higher values in the mid-latitudes that drop again as we enter the polar regions. The drop is more severe at the South Pole than at the North Pole. The graphic indicates the extreme asymmetry between the two hemispheres and the uniqueness of the South Pole in terms of atmospheric total ozone, and it supports the finding in a prior study that ozone behavior at the South Pole cannot be generalized to a global scale (Munshi, An empirical test of the chemical theory of ozone depletion, 2016).
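The spike replacement can be sketched as follows. The post does not state how spikes were detected, so the detection rule here (a two-day pair whose deviations from a local baseline exceed a hypothetical threshold `thresh` and have opposite signs) is an assumption, as is the reading of "day-7 to day-2" as the six days t-7 through t-2 counted from the first spike day t. The function name `remove_spikes` is hypothetical.

```python
import statistics


def remove_spikes(x, thresh):
    """Replace two-day spike pairs (one high, one low) with a local baseline.

    The baseline is the mean of days t-7 .. t-2, where t is the first day of
    the pair. The detection rule and `thresh` (in DU) are hypothetical choices.
    """
    y = list(x)
    t = 7
    while t < len(y) - 1:
        base = statistics.fmean(y[t - 7:t - 1])  # days t-7 .. t-2
        d1, d2 = y[t] - base, y[t + 1] - base
        if abs(d1) > thresh and abs(d2) > thresh and d1 * d2 < 0:
            y[t] = y[t + 1] = base  # replace both anomalous days
            t += 2
        else:
            t += 1
    return y
```

The opposite-sign requirement is there so that a single high or low day, or a genuine two-day excursion in one direction, is left untouched; only the high/low pairs described in the text are replaced.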

For ease of comparison with the TOMS mean monthly data series 1979-1992, the OMI daily data in Figure 7 are converted into monthly means from January 2005 to December 2015. The monthly means and their OLS trend are shown in Figure 9 below. OLS regression shows a rising trend of 0.0416 DU per month, or about 0.5 DU per year. Though statistically significant, this trend is of little practical consequence and is more likely to be the result of natural variability than of the implementation of the Montreal Protocol, for two reasons. First, the effect of the Protocol’s ban on ozone depleting substances is not expected for many decades to come because of the long atmospheric lifetime of halogenated hydrocarbons. Second, the size and direction of this trend are almost the exact opposite of the OLS trend observed in the 14-year period 1979-1992, in which we found global ozone declining at a rate of 0.65 DU per year. Yet both of these study periods fall in a regime in which the Rowland-Molina theory predicts continued and sustained ozone depletion by long-lived anthropogenic ozone depleting substances.
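Converting a monthly OLS slope to an annual rate is a multiplication by 12 (0.0416 × 12 ≈ 0.50 DU per year for the OMI series, and 0.0542 × 12 ≈ 0.65 DU per year for the TOMS series). A minimal sketch of the trend fit follows. The t-statistic it reports assumes independent residuals, which is exactly the assumption the Hurst analysis in this post calls into question. The names `ols_trend` and `per_year` are hypothetical.

```python
import math


def ols_trend(y):
    """OLS fit of y against time index 0..n-1.

    Returns (slope per step, t-statistic of the slope). The t-statistic
    is only meaningful if the residuals are independent.
    """
    n = len(y)
    tbar = (n - 1) / 2
    ybar = sum(y) / n
    sxx = sum((t - tbar) ** 2 for t in range(n))
    slope = sum((t - tbar) * (v - ybar) for t, v in enumerate(y)) / sxx
    intercept = ybar - slope * tbar
    sse = sum((v - intercept - slope * t) ** 2 for t, v in enumerate(y))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope, (slope / se if se > 0 else math.inf)


def per_year(monthly_slope):
    """Convert a slope in DU per month to DU per year."""
    return 12 * monthly_slope
```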

CHAOTIC BEHAVIOR OF THE OMI DAILY DATA TIME SERIES: A possible explanation for the apparent contradiction between the OLS trends observed in the two data series is chaotic behavior of the time series. Here we look at the Hurst exponent of the daily global ozone series 2005-2015. If the series contains memory and persistence, and therefore violates the OLS assumption of independence, we would expect its random behavior to generate faux patterns of this nature. The deseasonalized and detrended standardized residuals of the OMI daily data in Figure 7 are shown in Figure 10 below. They are examined with Rescaled Range analysis, with a total of 34 subsamples taken in 7 cycles and subsampling without replacement in each cycle. The Hurst exponent of the deseasonalized and detrended residuals of the latitudinally weighted daily mean global ozone series 2005-2015 is found to be H=0.784, much greater than H=0.5 and indicative of memory and persistence in the series. However, empirical values of H cannot be compared directly with the theoretical Gaussian value of H=0.5 because the subsampling strategy affects the empirical value of H. A calibration with a Gaussian series of the same sample size and subsampling strategy is therefore necessary for comparison. The calibration test with a random Gaussian series inserted into the same subsampling structure yielded a Hurst exponent of H=0.5217. The comparison of this neutral value with H=0.784 provides strong evidence of memory, dependence, and persistence, and therefore of chaotic behavior, in the daily mean global ozone time series. This behavior is depicted graphically below.
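The deseasonalized, detrended, standardized residuals fed to the R/S analysis can be produced along the lines below. This sketch assumes the seasonal cycle is removed by subtracting a day-of-year climatology with a fixed 365-day period (so leap days are ignored) and the trend by subtracting an OLS line; the post does not specify these details, and the name `standardized_residuals` is hypothetical.

```python
import statistics


def standardized_residuals(x, period=365):
    """Deseasonalize, detrend, and standardize a daily series."""
    # 1. Deseasonalize: subtract the mean for each position in the cycle.
    groups = {}
    for i, v in enumerate(x):
        groups.setdefault(i % period, []).append(v)
    clim = {k: statistics.fmean(v) for k, v in groups.items()}
    d = [v - clim[i % period] for i, v in enumerate(x)]
    # 2. Detrend: remove the OLS line fitted against the time index.
    n = len(d)
    tbar = (n - 1) / 2
    dbar = statistics.fmean(d)
    sxx = sum((t - tbar) ** 2 for t in range(n))
    b = sum((t - tbar) * (v - dbar) for t, v in enumerate(d)) / sxx
    r = [v - dbar - b * (t - tbar) for t, v in enumerate(d)]
    # 3. Standardize to zero mean and unit standard deviation.
    m, s = statistics.fmean(r), statistics.pstdev(r)
    return [(v - m) / s for v in r]
```

Detrending before R/S matters here: a residual trend would itself inflate the range R and push H upward, so removing it keeps the test focused on memory in the fluctuations.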

CONCLUSION: Satellite-based total ozone gridded data from the TOMS instrument (1979-1992) and the OMI instrument (2005-2015) are used to estimate latitudinally weighted global mean ozone levels. The global mean ozone values are found to have a regular seasonal cycle in the daily data and irregular seasonal cycles in the monthly mean data. The monthly mean data are examined for trends with OLS regression. In both datasets, statistically significant but practically insignificant trends are found, and they contradict each other. The older TOMS data show a depletion of mean monthly global ozone at a rate of 0.65 DU per year. The newer and possibly more reliable OMI data show an accretion of mean monthly global ozone at a rate of 0.5 DU per year. According to the chemical theory of ozone depletion adopted by the UNEP and the Montreal Protocol, both sample periods lie within a regime of continuous destruction of total ozone on a global scale by long-lived anthropogenic chemical agents. The weak and contradictory OLS trends found in this study cannot be explained in terms of this theory. The OLS assumption of independence is investigated with Rescaled Range analysis. The deseasonalized and detrended standardized residuals of daily mean global ozone levels in the OMI dataset 2005-2015 are found to have a high value of the Hurst exponent, indicative of dependence, persistence, and long-term memory.

The weak and contradictory OLS trends observed in the TOMS and OMI datasets can therefore be explained as artifacts of the Hurst phenomenon which is known to create apparent patterns and OLS trends out of randomness. These results are inconsistent with the Rowland-Molina theory of anthropogenic ozone depletion on which the Montreal Protocol is based.

POLICY IMPLICATION: THE APPARENT MONTREAL PROTOCOL SUCCESS THAT VAULTED THE UNITED NATIONS INTO A GLOBAL ROLE IN CLIMATE CHANGE HAS NO SUPPORTING EVIDENCE. IT SHOULD ALSO BE MENTIONED THAT THERE IS NO ROLE FOR THE OZONE HOLE IN THE ROWLAND MOLINA THEORY OF OZONE DEPLETION. THE OZONE HOLE IS A LOCALIZED EVENT. THE ROWLAND MOLINA THEORY OF OZONE DEPLETION RELATES ONLY TO LONG TERM TRENDS IN GLOBAL MEAN OZONE LEVEL. NO SUCH TREND HAS EVER BEEN PRESENTED AS EVIDENCE PROBABLY BECAUSE NO SUCH TREND IS FOUND IN THE DATA. THE OZONE DEPLETION CRISIS AND ITS MONTREAL PROTOCOL SOLUTION APPEARS TO BE AN IMAGINED CRISIS THAT WAS SIMPLY DECLARED TO HAVE BEEN SOLVED.

BEAR HUNTING

Posted on: September 29, 2020

THIS POST IS A CRITICAL COMMENTARY ON THE CLIMATE SCIENCE ASSUMPTION IN POLAR BEAR RESEARCH THAT OBSERVED CHANGES IN POLAR BEAR COUNTS AND PHYSICAL CONDITIONS OVER DECADAL TIME SCALES CAN BE UNDERSTOOD IN TERMS OF REDUCED SEA ICE EXTENT AND THEREFORE IN TERMS OF CLIMATE CHANGE WITH THE IMPLICATION THAT WE CAN SAVE POLAR BEARS BY TAKING CLIMATE ACTION.

SUMMARY: Whether the polar bears are in trouble is not the issue. The only issue is whether their trouble if any is caused by fossil fuel emissions and whether it can be moderated by taking climate action. This important aspect of the polar bear issue in climate science is missing from polar bear research carried out by climate science because these relationships are assumed into the research question and methodology as well as in the interpretation of results. Such research is not carried out to seek the relevant information but rather to provide the needed motivation for climate action in a campaign against fossil fuels. The research methods impose confirmation bias into the findings such that they have no interpretation or context outside of the climate change assumptions built into the research methodology.

Life on earth is a struggle for survival for all species in an evolutionary dynamic of species extinction and creation. It is not something that needs to be fixed by humans, nor something that can be fixed by giving up fossil fuels.

PART-1: THE VIEW FROM CLIMATE SCIENCE AND THE MEDIA

- Fasting season length sets temporal limits for global polar bear persistence. Péter K. Molnár ETAL, Nature Climate Change volume 10 (2020): Abstract: Polar bears require sea ice for capturing seals and are expected to decline range-wide as global warming and sea-ice loss continue. Estimating when different subpopulations will likely begin to decline has not been possible to date because data linking ice availability to demographic performance are unavailable for most subpopulations and unobtainable a priori for the projected but yet-to-be-observed low ice extremes. Here, we establish the likely nature, timing and order of future demographic impacts by estimating the threshold numbers of days that polar bears can fast before cub recruitment and/or adult survival are impacted and decline rapidly. Intersecting these fasting impact thresholds with projected numbers of ice-free days, estimated from a large ensemble of an Earth system model, reveals when demographic impacts will likely occur in different subpopulations across the Arctic. Our model captures demographic trends observed during 1979–2016, showing that recruitment and survival impact thresholds may already have been exceeded in some subpopulations. It also suggests that, with high greenhouse gas emissions, steeply declining reproduction and survival will jeopardize the persistence of all but a few high-Arctic subpopulations by 2100. Moderate emissions mitigation prolongs persistence but is unlikely to prevent some subpopulation extirpations within this century.

- NEW YORK TIMES: https://www.nytimes.com/2020/07/20/climate/polar-bear-extinction.html July 20, 2020: CITING THE MOLNAR PAPER: Polar bears could become nearly extinct by the end of the century as a result of shrinking sea ice in the Arctic if global warming continues unabated. Nearly all of the 19 subpopulations of polar bears, from the Beaufort Sea off Alaska to the Siberian Arctic, would face being wiped out because the loss of sea ice would force the animals onto land and away from their food supplies for longer periods. Prolonged fasting, and reduced nursing of cubs by mothers, would lead to rapid declines in reproduction and survival.

- There are about 25,000 polar bears in the Arctic. Their main habitat is sea ice, where they hunt seals by waiting for them to surface at holes in the ice. In some areas the bears remain on the ice year round, but in others the melting in spring and summer forces them to come ashore. They need the sea ice to capture their food; there is not enough food on land to sustain a polar bear population. But bears can fast for months (as long as 8 months). Arctic sea ice grows in the winter and melts and retreats in spring and summer. As the region has warmed rapidly in recent decades, sea ice extent in summer has declined by about 13 percent per decade compared to the 1981-2010 average. Some parts of the Arctic that previously had ice year-round now have ice-free periods in summer. Other parts are now free of ice for a longer portion of the year than in the past. The Molnar paper studied 13 of the subpopulations, accounting for 80 percent of the total bear population. The authors calculated the bears’ energy requirements in order to determine how long they could survive or, in the case of females, survive and nurse their cubs while fasting. Combining that with climate-model projections of ice-free days to 2100, they found that, for almost all of the subpopulations, the time that the animals would be forced to fast would eventually exceed the time that they are capable of fasting. The animals would starve. Longer fasting time also means a shorter feeding period. Not only do the bears have to fast for longer and need more energy to get through this, they also have a harder time accumulating this energy. While fasting, bears move as little as possible to conserve energy. But sea-ice loss and population declines force bears to expend more energy searching for a mate, and that also affects survival. Even under more modest warming projections, in which greenhouse gas emissions peak by 2040 and then begin to decline, many of the subgroups would still be wiped out.
Over the years, polar bears have become a symbol both for those who argue that urgent action on global warming is needed and for those who claim that climate change is not happening or, at best, that the issue is overblown. Groups including the Cato Institute, a libertarian research organization that challenges aspects of climate change, have called concerns about the bears unwarranted, arguing that some research shows that the animals have survived repeated warm periods. But scientists say during earlier warm periods the bears probably had significant alternative food sources, notably whales, that they do not have today. Poignant images of bears on isolated ice floes or roaming land in search of food have been used by conservation groups and others to showcase the need for action to reduce warming. Occasionally, though, these images have been shown to be not what they seem. After a video of an emaciated bear picking through garbage cans in the Canadian Arctic was posted online by National Geographic in 2017, the magazine acknowledged that the bear’s condition might not be related to climate change. Scientists had pointed out that there was no way of knowing what was wrong with the bear; it might have been sick or very old. The new research did not include projections in which emissions were reduced drastically, said Cecilia M. Bitz, an atmospheric scientist at the University of Washington and an author of the study. The research needs to be able to determine the periods when sea ice would be gone from a particular region. Andrew Derocher, a polar bear researcher at the University of Alberta, said the findings “are very consistent with what we’re seeing” from, for instance, monitoring the animals in the wild. “The study shows clearly that polar bears are going to do better with less warming,” he added. “But no matter which scenario you look at, there are serious concerns about conservation of the species.”
Of the 19 subpopulations, little is known about some, particularly those in the Russian Arctic. Of the subpopulations that have been studied, some, generally those in areas with less ice loss, have shown little population decline so far. But others, notably in the southern Beaufort Sea off northeastern Alaska and in western Hudson Bay in Canada, have been severely affected by loss of sea ice. One analysis found that the Southern Beaufort Sea subpopulation declined by 40 percent, to about 900 bears, in the first decade of this century (2000-2010). Derocher said one drawback with studies like these is that, while they can show the long-term trends, it is very difficult to model what is happening from year to year. Polar bear populations can be very susceptible to drastic year-to-year changes in conditions, he said. “One of the big conservation challenges is that one or two bad years can take down a sub-population that is healthy and push it to really low levels.”

CRITICAL COMMENTARY

BIAS IN THE RESEARCH QUESTION AND METHODOLOGY INCLUDES AN EXCLUSIVE FOCUS ON SEA ICE EXTENT AS THE ONLY DETERMINANT OF POLAR BEAR SUB-POPULATION DYNAMICS: As seen in the variables listed below that are known to affect polar bear subpopulation dynamics, it is a gross over-simplification to interpret these dynamics purely in terms of summer minimum sea ice extent. Human predation of polar bears by hunting for food and hides has been a feature of polar bear subpopulation dynamics (PBSPD) for thousands of years. Its intensity increased sharply 500 years ago when the commercial bear hide trade boomed, and again 70 years ago when snowmobiles, speed boats, and aircraft were employed in the post-war explosion of the bear hide business. It is widely believed that polar bear hunting has now been banned, but this is not true outside of Norway and some regions of Siberia where some restrictions have been placed on polar bear hunting. Native Arctic peoples, who have always hunted polar bears for food, clothing, and other purposes, have no restrictions. However, polar bear hunting by outsiders is restricted by an international agreement that forbids the use of snowmobiles, speedboats, and aircraft in these hunts. This agreement does not prohibit hunting of polar bears for hides. Non-human predation: in addition to human predation, young polar bear cubs are hunted for food by wolves and by adult polar bears. Starving nursing mothers may also feast on their cubs. In general, intra-species predation is prevalent among polar bears, where strong young males may feast on cubs or weaker females. Also, fighting among males for mating partners or hunting rights may result in death and cannibalism. Polar bears may look cute and cuddly but they are not as nice as they look. These behaviors of polar bears (and bears in general), though well known, are treated as anomalous in climate science research and attributed to AGW climate change by way of sea ice loss.
See for example, Amstrup and Stirling 2006 in the bibliography below.

NON-CLIMATE FACTORS IN POLAR BEAR SUB-POPULATION DYNAMICS

LONGEVITY: Generally 20 to 30 years, but as low as 15 and as high as 32. You can tell a bear's age by counting the layers in a thin slice of tooth. PREDATION: Adult polar bears have no predators except other polar bears, but cubs less than one year old are sometimes prey to wolves and other carnivores, and newborns may be eaten by polar bears themselves, especially if the mother is starved. INTRA-SPECIES PREDATION: This does not happen often, but males fight over females and will kill the competition to get the female they want. In extreme hunger conditions, male polar bears may attack, kill, and eat female polar bears. This is not a normal behavior pattern but it does happen. HUMAN PREDATION: Humans have hunted, killed, and eaten polar bears for thousands of years. Arctic people have traditionally hunted polar bears for food, clothing, bedding, and religious purposes. Commercial hunting for polar bear hides began more than 500 years ago. There was a sharp rise in the kill rate in the 1950s when modern equipment such as snowmobiles, speedboats, and aircraft was employed in the polar bear hide trade. The hunt expanded to what was eventually viewed as a threat to the survival of the species, and an International Agreement was signed in 1973 to ban the use of aircraft and speed boats in polar bear hunts, although hunting continued and remained the leading cause of polar bear mortality. It is popularly believed that polar bear hunting is now banned. STATE OF HUMAN PREDATION: Today, polar bears are hunted by native Arctic populations for food, clothing, handicrafts, and sale of skins. Polar bears are also killed in defense of people or property. However, hunting is strictly regulated in Canada, Greenland, Norway, and Russia. In Norway and Russia hunting polar bears is banned.
CLIMATE CHANGE IMPACT: Increasing temperatures are associated with a decrease in sea ice, both in terms of how much sea ice there is and how many months a year it is present. Polar bears use sea ice as a platform to prey mainly on ringed and bearded seals. Therefore, a decline in sea ice extent reduces the polar bear’s ability to hunt for seals and can cause bears to starve or at least to be malnourished. YOUNG POLAR BEARS: Subadults are inexperienced hunters and are often chased from kills by larger adults. OLD & WEAK BEARS are also susceptible to starvation for the same reason: they can’t compete with younger and stronger bears. In hunt-constrained situations, as with limited sea ice, kids and seniors starve first. Climate change scientists have found (bibliography in related post) that polar bear subpopulations have shown increasing evidence of food deprivation, including an increase in the number of underweight or starving bears, smaller bears, fewer cubs, and cubs that don’t survive into adulthood, partly because in food-constrained situations cubs are more likely to be eaten by adult polar bears. This takes place in areas that are experiencing shorter hunting seasons with limited access to sea ice. These conditions limit the bears’ ability to hunt for seals.

The implication for climate impact studies is that a comparison of polar bear subpopulation counts across time at brief decadal time scales, in and of itself, may not have a climate change sea ice interpretation because of the number of other variables involved in these dynamics.

See for example the bibliography below, where papers like Bromaghin etal 2015, though they carry out the analysis on the assumption that sea ice decline due to climate change is the cause of the observed population dynamics, also admit that there are other drivers of polar bear sub-population dynamics that have not been included in the analysis. Two other characteristics of these studies are (1) that changes at short time scales of 5 years or less are interpreted as trends related to AGW global warming and sea ice decline; and (2) a pattern in research methodology of first identifying some changes at these short time scales and then finding ways to attribute the observed changes to sea ice dynamics and therefore to AGW climate change (see for example Pagano 2012).

A confirmation bias methodology is the norm. Few papers state this explicitly, but in papers such as Pongracz and Derocher 2017 the authors admit that their research is motivated and guided by the assumption that “Climate change is altering habitats and causing changes to species behaviors and distributions. Rapid changes in Arctic sea ice ecosystems have increased the need to identify critical habitats for conservation and management of species such as polar bears” and that they therefore examined the distribution of adult female and subadult male and female polar bears and interpreted the terrestrial and sea ice areas used as summer refugia in terms of sea ice melt.

Yet another factor is the assumption that observed changes in September minimum sea ice extent are driven by global warming such that they can be moderated by taking climate action by reducing or eliminating the use of fossil fuels. This critical causal relationship is simply assumed in climate science. However, as shown in related posts: LINK: https://tambonthongchai.com/2020/09/25/list-of-arctic-sea-ice-posts/ , detrended correlation analysis does not show that September minimum Arctic sea ice extent is responsive to air temperature above the Arctic. This means that we have no evidence to support the assumption that fossil fuel emissions cause lower September minimum sea ice extent and that this trend can be attenuated by taking climate action. Thus, in short, the two critical causations in polar bear research by climate scientists, (1) that fossil fuel emissions lower September minimum sea ice extent and (2) that polar bear sub-population dynamics are the creation of changes in September minimum sea ice extent, are simply assumed with no empirical evidence provided to support them.
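The idea behind detrended correlation can be illustrated with a short sketch. Two series that share nothing but opposite linear trends show a strong raw correlation, which largely disappears once each series is detrended, so that only the year-to-year co-movement is tested. The data below are synthetic and purely illustrative (not Arctic observations), detrending is assumed to mean removing an OLS linear trend, and all function names are hypothetical; the related posts may use a different detrending procedure.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def detrend(y):
    """Residuals of y after removing its OLS linear trend against time."""
    n = len(y)
    tbar, ybar = (n - 1) / 2, sum(y) / n
    sxx = sum((t - tbar) ** 2 for t in range(n))
    b = sum((t - tbar) * (v - ybar) for t, v in enumerate(y)) / sxx
    return [v - ybar - b * (t - tbar) for t, v in enumerate(y)]


def detrended_correlation(a, b):
    return pearson(detrend(a), detrend(b))


# Synthetic illustration: opposite trends, unrelated year-to-year variation.
years = range(40)
temp = [0.2 * t + math.sin(t) for t in years]
ice = [-0.3 * t + math.cos(t) for t in years]
```

In this constructed example the raw correlation between `temp` and `ice` is strongly negative purely because of the shared trends, while the detrended correlation is close to zero.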

In this context it should be noted that the Arctic is geologically very active with significant mantle plume activity and ocean floor volcanism as described in a related post: LINK: https://tambonthongchai.com/2019/07/01/arctic/ . It is therefore necessary to take these effects into consideration in the study of ice melt events in the Arctic instead of the extreme effort in climate science to explain all Arctic ice melt phenomena in terms of the atmosphere.

SUMMARY: Whether the polar bears are in trouble is not the issue. The only issue is whether their trouble if any is caused by fossil fuel emissions and whether it can be moderated by taking climate action. This important aspect of the polar bear issue in climate science is missing from polar bear research carried out by climate science apparently to provide the needed motivation for climate action in a campaign against fossil fuels.

RINGED SEALS

THE RELEVANT BIBLIOGRAPHY

- Regehr, Eric V., et al. “Survival and breeding of polar bears in the southern Beaufort Sea in relation to sea ice.” Journal of Animal Ecology 79.1 (2010): 117-127. Observed and predicted declines in Arctic sea ice have raised concerns about marine mammals. In May 2008, the US Fish and Wildlife Service listed polar bears (Ursus maritimus) – one of the most ice‐dependent marine mammals – as threatened under the US Endangered Species Act. We evaluated the effects of sea ice conditions on vital rates (survival and breeding probabilities) for polar bears in the southern Beaufort Sea. Although sea ice declines in this and other regions of the polar basin have been among the greatest in the Arctic, to date population‐level effects of sea ice loss on polar bears have only been identified in western Hudson Bay, near the southern limit of the species’ range. We estimated vital rates using multistate capture–recapture models that classified individuals by sex, age and reproductive category. We used multimodel inference to evaluate a range of statistical models, all of which were structurally based on the polar bear life cycle. We estimated parameters by model averaging, and developed a parametric bootstrap procedure to quantify parameter uncertainty. In the most supported models, polar bear survival declined with an increasing number of days per year that waters over the continental shelf were ice free. In 2001–2003, the ice‐free period was relatively short (mean 101 days) and adult female survival was high (0·96–0·99, depending on reproductive state). In 2004 and 2005, the ice‐free period was longer (mean 135 days) and adult female survival was low (0·73–0·79, depending on reproductive state). Breeding rates and cub litter survival also declined with increasing duration of the ice‐free period. Confidence intervals on vital rate estimates were wide.
The effects of sea ice loss on polar bears in the southern Beaufort Sea may apply to polar bear populations in other portions of the polar basin that have similar sea ice dynamics and have experienced similar, or more severe, sea ice declines. Our findings therefore are relevant to the extinction risk facing approximately one‐third of the world’s polar bears.

- Schliebe, S., et al. “Effects of sea ice extent and food availability on spatial and temporal distribution of polar bears during the fall open-water period in the Southern Beaufort Sea.” Polar Biology 31.8 (2008): 999-1010. We investigated the relationship between sea ice conditions, food availability, and the fall distribution of polar bears in terrestrial habitats of the Southern Beaufort Sea via weekly aerial surveys in 2000–2005. Aerial surveys were conducted weekly during September and October along the Southern Beaufort Sea coastline and barrier islands between Barrow and the Canadian border to determine polar bear density on land. The number of bears on land both within and among years increased when sea-ice was retreated furthest from the shore. However, spatial distribution also appeared to be related to the availability of subsistence-harvested bowhead whale carcasses and the density of ringed seals in offshore waters. Our results suggest that long-term reductions in sea-ice could result in an increasing proportion of the Southern Beaufort Sea polar bear population coming on land during the fall open-water period and an increase in the amount of time individual bears spend on land.

- Hunter, Christine M., et al. “Polar bears in the Southern Beaufort Sea II: Demography and population growth in relation to sea ice conditions.” USGS Alaska Science Center, Anchorage, Administrative Report (2007). This is a demographic analysis of the southern Beaufort (SB) polar bear population. The analysis uses a female-dominant stage-classified matrix population model in which individuals are classified by age and breeding status. Parameters were estimated from capture-recapture data collected between 2001 and 2006. We focused on measures of long-term population growth rate and on projections of population size over the next 100 years. We obtained these results from both deterministic and stochastic demographic models. Demographic results were related to a measure of sea ice condition, ice(t), defined as the number of ice-free days, in year t, in the region of preferred polar bear habitat. Larger values of ice(t) correspond to lower availability of sea ice and longer ice-free periods. Uncertainty in results was quantified using a parametric bootstrap approach that includes both sampling uncertainty and model selection uncertainty. Deterministic models yielded estimates of population growth rate λ, under low ice conditions in 2001–2003, ranging from 1.02 to 1.08. Under high ice conditions in 2004–2005, estimates of λ ranged from 0.77 to 0.90. The overall growth rate estimated from a time-invariant model was about 0.997; i.e., a 0.3% decline per year. Population growth rate was most elastic to changes in adult female survival, and an LTRE analysis showed that the decline in λ relative to 2001 conditions was primarily due to reduction in adult female survival, with secondary contributions from reduced breeding probability. Based on demographic responses, we classified environmental conditions into good (2001–2003) and bad (2004–2005) years, and used this classification to construct stochastic models.
In those models, good and bad years occur independently with specified probabilities. We found that the stochastic growth rate declines with an increase in the frequency of bad years. The observed frequency of bad years since 1979 would imply a stochastic growth rate of about -1% per year. Deterministic population projections over the next century predict serious declines unless conditions typical of 2001–2003 were somehow to be maintained. Stochastic projections predict a high probability of serious declines unless the frequency of bad ice years is less than its recent average. To explore future trends in sea ice, we used the output of 10 selected general circulation models (GCMs), forced with “business as usual” greenhouse gas emissions, to predict values of ice(t) until the end of the century. We coupled these to the stochastic demographic model to project population trends under scenarios of future climate change. All GCM models predict a crash in the population within the next century, possibly preceded by a transient population increase. The parameter estimates on which the demographic models are based have high levels of uncertainty associated with them, but the agreement of results from different statistical model sets, deterministic and stochastic models, and models with and without climate forcing, speaks for the robustness of the conclusions.

- Bromaghin, Jeffrey F., et al. “Polar bear population dynamics in the southern Beaufort Sea during a period of sea ice decline.” Ecological Applications 25.3 (2015): 634-651. In the southern Beaufort Sea of the United States and Canada, prior investigations have linked declines in summer sea ice to reduced physical condition, growth, and survival of polar bears. Combined with projections of population decline due to continued climate warming and the ensuing loss of sea ice habitat, those findings contributed to the 2008 decision to list the species as threatened under the U.S. Endangered Species Act. Here, we used mark–recapture models to investigate the population dynamics of polar bears in the southern Beaufort Sea from 2001 to 2010, years during which the spatial and temporal extent of summer sea ice generally declined. Low survival from 2004 through 2006 led to a 25–50% decline in abundance. We hypothesize that low survival during this period resulted from (1) unfavorable ice conditions that limited access to prey during multiple seasons; and possibly, (2) low prey abundance. For reasons that are not clear, survival of adults and cubs began to improve in 2007 and abundance was comparatively stable from 2008 to 2010, with ~900 bears in 2010 (90% CI 606–1212). However, survival of subadult bears declined throughout the entire period. Reduced spatial and temporal availability of sea ice is expected to increasingly force population dynamics of polar bears as the climate continues to warm. However, in the short term, our findings suggest that factors other than sea ice can influence survival. A refined understanding of the ecological mechanisms underlying polar bear population dynamics is necessary to improve projections of their future status and facilitate development of management strategies.

- Stirling, Ian, et al. “Unusual predation attempts of polar bears on ringed seals in the southern Beaufort Sea: possible significance of changing spring ice conditions.” Arctic (2008): 14-22. In April and May 2003 through 2006, unusually rough and rafted sea ice extended for several tens of kilometres offshore in the southeastern Beaufort Sea from about Atkinson Point to the Alaska border. Hunting success of polar bears seeking seals was low despite extensive searching for prey. It is unknown whether seals were less abundant in comparison to other years or less accessible because they maintained breathing holes below rafted ice rather than snowdrifts, or whether some other factor was involved. However, we found 13 sites where polar bears had clawed holes through rafted ice in attempts to capture ringed seals in 2005 through 2006 and another site during an additional research project in 2007. Ice thickness at the 12 sites that we measured averaged 41 cm. These observations, along with cannibalized and starved polar bears found on the sea ice in the same general area in the springs of 2004 through 2006, suggest that during those years, polar bears in the southern Beaufort Sea were nutritionally stressed. Searches made farther north during the same period and using the same methods produced no similar observations near Banks Island or in Amundsen Gulf. A possible underlying ecological explanation is a decadal-scale downturn in seal populations. But a more likely explanation is major changes in the sea-ice and marine environment resulting from record amounts and duration of open water in the Beaufort and Chukchi seas, possibly influenced by climate warming. Because the underlying causes of observed changes in polar bear body condition and foraging behavior are unknown, further study is warranted.

- Pagano, Anthony M., et al. “Long-distance swimming by polar bears (Ursus maritimus) of the southern Beaufort Sea during years of extensive open water.” Canadian Journal of Zoology 90.5 (2012): 663-676. Polar bears depend on sea ice for catching marine mammal prey. Recent sea-ice declines have been linked to reductions in body condition, survival, and population size. Reduced foraging opportunity is hypothesized to be the primary cause of sea-ice-linked declines, but the costs of travel through a deteriorated sea-ice environment also may be a factor. We used movement data from 52 adult female polar bears wearing GPS collars, including some with dependent young, to document long-distance swimming (>50 km) by polar bears in the southern Beaufort and Chukchi seas. During 6 years (2004–2009), we identified 50 long-distance swims by 20 bears. Swim duration and distance ranged from 0.7 to 9.7 days (mean = 3.4 days) and 53.7 to 687.1 km (mean = 154.2 km), respectively. Frequency of swimming appeared to increase over the course of the study. We show that adult female polar bears and their cubs are capable of swimming long distances during periods when extensive areas of open water are present. However, long-distance swimming appears to have higher energetic demands than moving over sea ice. Our observations suggest long-distance swimming is a behavioral response to declining summer sea-ice conditions.

- Pongracz, Jodie D., and Andrew E. Derocher. “Summer refugia of polar bears (Ursus maritimus) in the southern Beaufort Sea.” Polar Biology 40.4 (2017): 753-763. Climate change is altering habitats and causing changes to species behaviors and distributions. Rapid changes in Arctic sea ice ecosystems have increased the need to identify critical habitats for conservation and management of species such as polar bears. We examined the distribution of adult female and subadult male and female polar bears tracked by satellite telemetry (n = 64 collars) in the southern Beaufort Sea, Canada, to identify summer refugia in 2007–2010. Using utilization distributions, we identified terrestrial and sea ice areas used as summer refugia when nearshore sea ice melted. Habitat use areas varied between months, but interannual variation was not significant. Overall, bears made high use of ice over shallow waters, and bears that remained near terrestrial areas used sea ice (presumably to hunt from) when it was available. The majority of the bears remained on sea ice during summer and used the edge of the pack ice most notably west of Banks Island, Canada. A mean of 27 % (range 22–33 %) of bears used terrestrial areas in Alaska and use was concentrated near the remains of subsistence harvested bowhead whales (Balaena mysticetus). Energetic expenditure is anticipated to increase as bears are required to travel further on a seasonal basis.

- Amstrup, Steven C., et al. “Recent observations of intraspecific predation and cannibalism among polar bears in the southern Beaufort Sea.” Polar Biology 29.11 (2006): 997. Intraspecies killing has been reported among polar bears, brown bears, and black bears. Although cannibalism is one motivation for such killings, the ecological factors mediating such events are poorly understood. Between 24 January and 10 April 2004, we confirmed three instances of intraspecies predation and cannibalism in the Beaufort Sea. One of these, the first of this type ever reported for polar bears, was a parturient female killed at her maternal den. The predating bear was hunting in a known maternal denning area and apparently discovered the den by scent. A second predation event involved an adult female and cub recently emerged from their den, and the third involved a yearling male. During 24 years of research on polar bears in the southern Beaufort Sea region of northern Alaska and 34 years in northwestern Canada, we have not seen other incidents of polar bears stalking, killing, and eating other polar bears. We hypothesize that nutritional stresses related to the longer ice-free seasons that have occurred in the Beaufort Sea in recent years may have led to the cannibalism incidents we observed in 2004.
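The Hunter et al. (2007) entry above reports population growth as a yearly multiplier λ. As a rough illustration only, the sketch below uses a simplified scalar (single-stage) model, not the stage-classified matrix model the report actually uses. It converts λ into a percent change per year, compounds it over a century, and shows why the long-run growth of a scalar model falls as bad years become more frequent when good and bad years occur independently. The function names and the good/bad λ values in the usage note (1.05 and 0.835, rough midpoints of the reported ranges) are hypothetical choices, not figures from the report.

```python
import math


def annual_percent_change(lam: float) -> float:
    """Convert a per-year growth multiplier lambda into a percent change per year."""
    return (lam - 1.0) * 100.0


def project(n0: float, lam: float, years: int) -> float:
    """Deterministic scalar projection N_t = N_0 * lambda**t.

    Simplification: a single constant lambda, not the report's matrix model.
    """
    return n0 * lam ** years


def scalar_stochastic_log_growth(lam_good: float, lam_bad: float, p_bad: float) -> float:
    """Long-run log growth rate when good and bad years occur independently.

    For a scalar model with independent years this equals E[log lambda]:
    (1 - p_bad) * log(lam_good) + p_bad * log(lam_bad).
    A negative value means the population declines in the long run.
    """
    return (1.0 - p_bad) * math.log(lam_good) + p_bad * math.log(lam_bad)


if __name__ == "__main__":
    print(f"lambda 0.997 -> {annual_percent_change(0.997):.1f}% per year")
    print(f"fraction remaining after 100 years: {project(1.0, 0.997, 100):.2f}")
    # Hypothetical good/bad multipliers (rough midpoints of the reported ranges).
    for p in (0.0, 0.1, 0.2, 0.3, 0.5):
        print(p, round(scalar_stochastic_log_growth(1.05, 0.835, p), 4))
```

Under these assumptions, `project(1.0, 0.997, 100)` is about 0.74, so a time-invariant λ of 0.997 would compound to roughly a 26% decline over 100 years. With the hypothetical inputs, `scalar_stochastic_log_growth` turns negative once `p_bad` exceeds about 0.21. This is the qualitative pattern the entry describes, though the report's own thresholds come from its matrix model.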

THIS POST IS A CRITICAL REVIEW OF THE 2020 CLIMATE CHANGE REPORT ISSUED BY THE WORLD METEOROLOGICAL ORGANIZATION (WMO) AND THE UNITED NATIONS.

RELATED POST ON THE WMO: THE WMO CLIMATE ALARM OF 2019: https://tambonthongchai.com/2019/09/25/wmo2019/

PART-1: WHAT THE 2020 WMO CLIMATE REPORT SAYS

- A wide-ranging UN climate report, released on Tuesday, shows that climate change is having a major effect on all aspects of the environment, as well as on the health and wellbeing of the global population. The report, The WMO Statement on the State of the Global Climate in 2019, which is led by the UN weather agency (World Meteorological Organization), contains data from an extensive network of partners. It documents physical signs of climate change – such as increasing land and ocean heat, accelerating sea level rise and melting ice – and the knock-on effects on socio-economic development, human health, migration and displacement, food security, and land and marine ecosystems.

- Writing in the foreword to the report, UN chief António Guterres warned that the world is currently way off track for meeting either the 1.5°C or the 2°C target that the Paris Agreement calls for, referring to the commitment made by the international community in 2015 to keep global average temperatures well below 2°C above pre-industrial levels.

- Several heat records have been broken in recent years and decades: the report confirms that 2019 was the second warmest year on record, and 2010–2019 was the warmest decade on record. Since the 1980s, each successive decade has been warmer than any preceding decade since 1850. The warmest year so far was 2016, but that could be topped soon, said WMO Secretary-General Petteri Taalas.

- “Given that greenhouse gas levels continue to increase, the warming will continue. A recent decadal forecast indicates that a new annual global temperature record is likely in the next five years. It is a matter of time,” added the WMO Secretary-General. In an interview with UN News, Mr. Taalas said that there is a growing understanding across society, from the finance sector to young people, that climate change is the number one problem mankind is facing today.

- “There are plenty of good signs that we have started moving in the right direction.” “Last year emissions dropped in developed countries, despite the growing economy, so we have been able to show that you can detach economic growth from emission growth. The bad news is that, in the rest of the world, emissions grew last year. So, if we want to solve this problem, we have to have all the countries on board” (but we don’t).

- Mr. Taalas added that countries still aren’t fulfilling the commitments they made at the UN Paris climate conference in 2015, leaving the world currently on course for a temperature increase of four to five degrees by the end of this century: “there’s clearly a need for higher ambition levels if we’re serious about climate mitigation”.

- Mr. Taalas noted that 2020 has seen the warmest January recorded so far, and that winter has been “unseasonably mild” in many parts of the northern hemisphere.

- Ongoing warming in Antarctica saw large-scale ice melt and the fracturing of a glacier, with repercussions for sea level rise, and carbon dioxide emissions spiked following the devastating Australian bushfires, which spread smoke and pollutants around the world.

- Australia’s 2018-2019 summer was the hottest ever recorded, reaching a peak of 41.9 degrees centigrade on December 18. Australia’s seven hottest days on record, and nine of the 10 hottest, occurred in 2019. The country was not the only place affected by extreme heat, or wildfires.

- Temperature records were broken in several European countries, including France, Germany, and the United Kingdom. Even Nordic countries saw record-breaking temperatures, including Finland, which registered a high of 33.2°C in the capital, Helsinki.

- Several high latitude regions, including Siberia and Alaska, saw high levels of fire activity, as did some parts of the Arctic, where it was previously extremely rare.

- Indonesia and neighbouring countries had their most significant fire season since 2015, and total fire activity in South America was the highest since 2010.

- There have been widespread impacts of ocean warming. For example, ice now floats on the waters of Prince Gustav Channel in Antarctica, where an ice shelf (the Prince Gustav Ice Shelf) spanning more than 28 km used to exist. The ice shelf has since retreated and collapsed.

- Greenhouse gas emissions continued to grow in 2019, leading to increased ocean heat, and such phenomena as rising sea levels, the altering of ocean currents, melting floating ice shelves, and dramatic changes in marine ecosystems.

- The ocean has seen increased acidification and deoxygenation, with negative impacts on marine life, and the wellbeing of people who depend on ocean ecosystems.

- At the poles, sea ice continues to decline, and glaciers shrank yet again, for the 32nd consecutive year. Between 2002 and 2016, the Greenland ice sheet lost some 260 gigatonnes of ice per year, with a peak loss of 458 gigatonnes in 2011/12. The 2019 loss of 329 gigatonnes was well above average.

- In 2019, extreme weather events, some of which were unprecedented in scale, took place in many parts of the world. The monsoon season saw rainfall above the long-term average in India, Nepal, Bangladesh and Myanmar, and flooding led to the loss of some 2,200 lives in the region.

- Parts of South America were hit by floods in January, whilst Iran was badly affected in late March and early April. In the US, total economic losses from flooding were estimated at around $20 billion.